Quality SEO goes a long way toward making life easier for both web admins and search engines. Content that is easy to discover and interact with tends to earn higher SERP rankings. And if front-end development is in its golden age, then technical SEO for JavaScript (JS) is in its renaissance.

About 80% of popular eCommerce stores in the US use JS. Used well, it helps these sites load quickly, stay accessible to users with outdated browsers, add interactivity, and improve user experience. However, JavaScript greatly complicates crawling and often leads to lower rankings or even to pages that cannot be indexed at all. A single mistake in the code can cause Googlebot to fail when parsing the page.

SEO specialists don’t need to learn JS in depth; their goal should rather be to understand how crawling and rendering work, which can turn JS from a hindrance into an ally. In this article, we’ll talk about making content easier to crawl and avoiding indexing problems.

What Is JavaScript SEO?

JavaScript is one of the core technologies of the web and may well shape its future. It’s a programming language used in all kinds of applications and websites. On websites, when optimized correctly, it can even help pages’ SERP visibility through a faster, more interactive experience. It works alongside HTML and CSS:

HTML defines the actual content of a web page;

CSS is responsible for the interface;

JS offers a high level of dynamism and interactivity.

Note: JavaScript can modify the HTML (through the DOM) after the page has loaded.

JS allows you to update page content quickly and add animated graphics, sliders, interactive forms, maps, games, etc. E.g., on Forex and CFD trading websites, it’s used to update exchange rates in real time; otherwise, visitors would have to refresh the page manually.

The content it usually generates falls into the following groups:

- Pagination

- Internal links

- Top products

- Ratings

- Comments

- Main content (rarely)

Note: create pages for users, not search engines. In particular, track how your pages rank with a Google SERP position checker. Such a tool also lets you measure the performance of your web pages in various search engines, including Google, Bing, Yahoo, Yandex, etc.

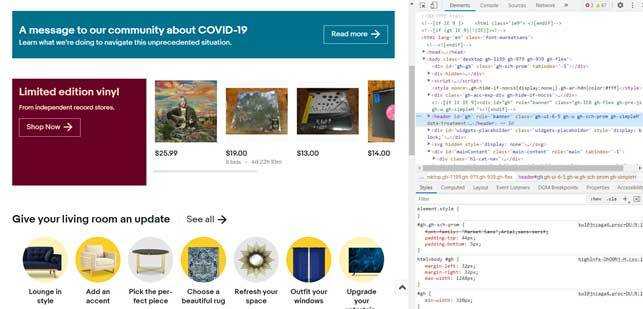

Websites are often built with frameworks such as Angular (by Google), React (by Facebook), Vue.js (by Evan You), and Polymer (by Google and contributors), which allow you to develop and scale interactive web applications quickly. E.g., a site built with Angular, a reasonably popular framework, looks like a regular web page when viewed in a browser, even though its content is generated with JS.

Note: use BuiltWith or Wappalyzer to find out whether your site is JavaScript-based; they identify the frameworks and libraries a site uses. You can also disable JS with a browser extension such as Quick JavaScript Switcher in Chrome or JavaScript Switch in Firefox: if some page components disappear, they were created with JS.

The page source alone isn’t always enough to understand content that JS updates dynamically. For that, explore the Document Object Model (DOM): right-click → Inspect element.
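If you want to compare the two views more systematically, you can save the raw source (view-source) and the rendered DOM (e.g., Copy → Copy outerHTML on the html node in DevTools) as strings and diff their visible text. The sketch below uses only Python’s standard library; the function names are hypothetical, and comparing whole text chunks is a simplification that won’t catch partial edits inside a chunk.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collects visible text chunks, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        text = " ".join(data.split())  # normalize whitespace
        if text and not self._skip:
            self.chunks.append(text)


def visible_text(html):
    parser = TextCollector()
    parser.feed(html)
    parser.close()
    return parser.chunks


def js_only_text(raw_html, rendered_html):
    """Text present in the rendered DOM but absent from the raw HTML source."""
    raw_chunks = set(visible_text(raw_html))
    return [chunk for chunk in visible_text(rendered_html) if chunk not in raw_chunks]


raw = '<body><h1>Shop</h1><div id="root"></div><script src="/app.js"></script></body>'
rendered = ('<body><h1>Shop</h1><div id="root"><p>Red sneakers</p></div>'
            '<script src="/app.js"></script></body>')
print(js_only_text(raw, rendered))  # ['Red sneakers']
```

Anything this reports exists only after JS runs, so it’s exactly the content worth checking in the URL Inspection Tool.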

Optimizing your site is essential to improve crawling performance. JavaScript SEO is the area of technical SEO that makes JS-powered sites easier for search engines to crawl and index. Nevertheless, it’s pretty easy to make a mistake when working with such sites, and you may have to go through numerous arguments with developers to prove that an error exists.

Examples of popular websites that utilize JavaScript:

Google

The most famous search engine, with a significant market share, is built on JS. By the end of 2021, over 1.6 billion users were estimated to visit it every day!

Facebook and YouTube

Both platforms use JS along with other stacks. By traffic volume, they rightfully rank second among the most popular sites, with about 1.1 billion visitors.

Wikipedia

The encyclopedia, with its simple design and no display ads, is also built on JS, PHP, and other technologies. About 475 million users read it.

eBay and Amazon

These online shopping platforms set the tone for the entire eCommerce market. Along with Java, Oracle, and Perl, they also use JavaScript.

Is JavaScript Good or Bad for SEO?

This question is still open and causes a lot of controversy.

JavaScript is vital for creating scalable, easy-to-maintain resources. Google and Bing have both made JS-related announcements about interoperability improvements. For instance, Google started using an up-to-date version of Chrome to render web pages, including their JavaScript, style sheets, etc. Bing is not far behind: it has adopted the new Chromium-based Microsoft Edge as its rendering engine, so, like Googlebot and Chromium-based browsers, Bingbot now renders all pages with the same modern web platform.

Regular updates provide support for the latest features, a real “quantum leap” from previous versions! For website owners, this saves time and money: it’s easier to get their sites and web content management systems working for both search engines. This doesn’t apply to files blocked in robots.txt, which neither crawler fetches.

Plus, you no longer need separate tests for Bing or a copy of Google Chrome 41 at hand to test Googlebot. Nor do you need a compatibility list specifying which JS functions and stylesheet directives work in each search engine.

JavaScript can outperform traditional approaches based on plain HTML, PHP, and similar technologies in loading speed and user interaction with web pages.

Of course, not everyone is used to JS: working with it requires additional training, and some developers tend to overuse it. The language is imperfect and not always the right tool: unlike HTML and CSS, which browsers can render progressively as they arrive, the content a script produces appears only after the script has been downloaded, parsed, and executed. Furthermore, some implementations hurt page crawling and indexing, reducing visibility to crawlers. Remember, sometimes you have to choose between performance and functionality; which matters more depends on your site.

Using JavaScript frameworks creates SEO problems in several areas:

Rendered content

Many modern web applications are built on frameworks. Angular, which we mentioned above, and similar platforms often serve initial HTML that is utterly bereft of content: there is only an application skeleton and a few tags that inject the important elements into the DOM through JS.

That can be a severe problem for search robots that cannot render the content, and for SEO professionals and marketers, it means the site risks being ignored in favor of competitors.

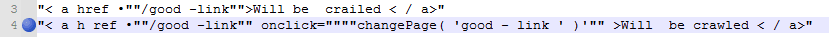
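A quick way to spot such a skeleton is to compare how much visible text the raw HTML contains with how many script tags it loads. The following Python sketch is a rough heuristic, not an official test: the ShellStats class and looks_like_app_shell function are hypothetical, and the 200-character threshold is an arbitrary assumption you’d tune for your own site.

```python
from html.parser import HTMLParser


class ShellStats(HTMLParser):
    """Counts <script> tags and visible text characters in raw HTML."""

    def __init__(self):
        super().__init__()
        self.scripts = 0
        self.text_chars = 0
        self._skip = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.scripts += 1
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.text_chars += len(data.strip())


def looks_like_app_shell(html, min_text_chars=200):
    """Heuristic: the page ships scripts but almost no text in its raw HTML."""
    stats = ShellStats()
    stats.feed(html)
    stats.close()
    return stats.scripts > 0 and stats.text_chars < min_text_chars


shell = ('<html><head><title>Shop</title></head><body>'
         '<noscript>You need to enable JavaScript to run this app.</noscript>'
         '<div id="root"></div><script src="/static/js/main.js"></script></body></html>')
print(looks_like_app_shell(shell))  # True
```

A True result only means the raw HTML is nearly empty; whether Google sees the content after rendering is what the URL Inspection Tool tells you.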

Internal links

JS can affect Google's ability to discover and crawl links. That’s why guides, including Google's own, strongly recommend using HTML anchor (<a>) elements with href attributes and descriptive anchor text.

Note: div and span elements, and other elements that navigate only through JS event handlers (such as onclick), are "pseudo" links and aren’t crawled. Therefore, it’s better to implement links as static HTML <a href> elements.

If crawlers fail to follow links to the site's key pages or to new content (outside the XML sitemap), the internal links pointing to them are most likely missing or incorrectly implemented.

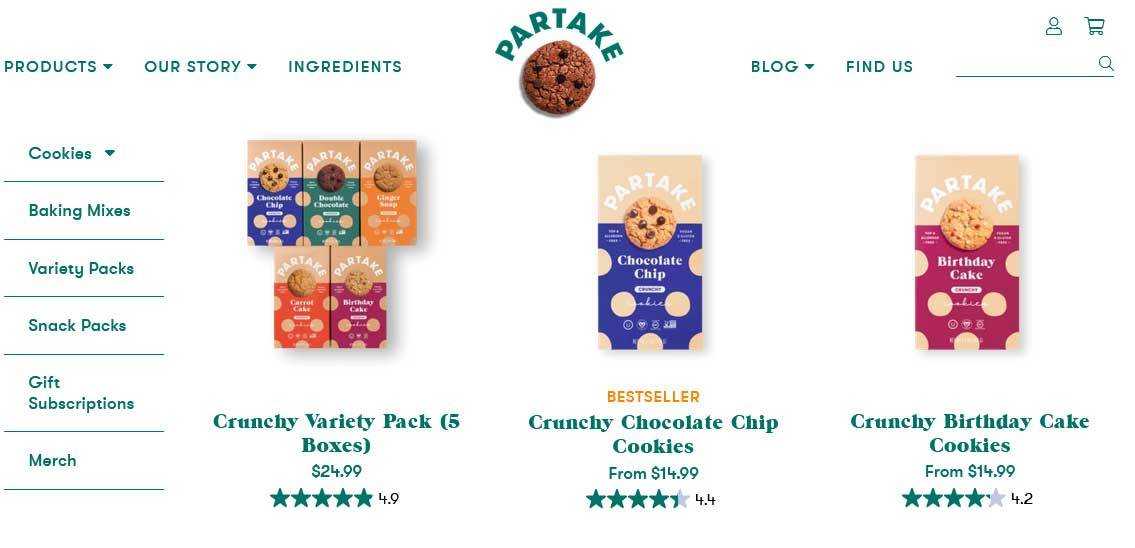
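To find such problems at scale, you can parse a page’s HTML and separate real <a href> links from elements that only navigate through JS. A minimal Python sketch, assuming you already have the HTML as a string; LinkAudit and audit_links are hypothetical names, and anything flagged as a pseudo link is only a candidate for manual review, since an onclick handler doesn’t always navigate.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Separates crawlable <a href> links from JS-only "pseudo" links."""

    def __init__(self):
        super().__init__()
        self.crawlable = []  # href values a crawler can follow
        self.pseudo = []     # tag names of elements that rely on JS to navigate

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        href = (a.get("href") or "").strip()
        if tag == "a" and href and not href.lower().startswith("javascript:"):
            self.crawlable.append(href)
        elif tag == "a" or "onclick" in a:
            # <a> without a real href, or any element with a click handler
            self.pseudo.append(tag)


def audit_links(html):
    parser = LinkAudit()
    parser.feed(html)
    parser.close()
    return parser.crawlable, parser.pseudo


html = ('<a href="/shoes">Shoes</a>'
        '<span onclick="location.href=\'/bags\'">Bags</span>'
        '<a href="javascript:void(0)">Hats</a>')
print(audit_links(html))  # (['/shoes'], ['span', 'a'])
```

Run it on the rendered HTML as well as the raw source: links that appear only after rendering are discovered later, if at all.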

Lazy-loading images

Have you ever wondered why Googlebot sometimes doesn’t process visual content? The reason is that it supports lazy loading but doesn’t "scroll" as a human does when viewing a page. Instead, it resizes its virtual viewport to be longer, so a "scroll" event listener is never triggered.

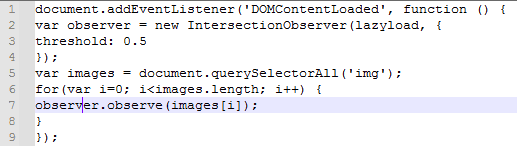

The screenshot shows code using the Intersection Observer API, which triggers a callback when any observed element becomes visible. Here it replaces an on-scroll event listener, and it’s supported by modern Googlebot.

Another option is the native loading="lazy" attribute for images, which you can use in the Google Chrome browser. At worst, a bot ignores it, and the images are simply loaded as usual.

Note: lazy-loading images on eCommerce websites that display multiple rows of product listings speeds up interactions for both visitors and Googlebot.

Scroll-based lazy loading on its own isn’t the best strategy to rely on: sometimes content that should become visible never loads. Let's say people who watch soap operas while working open the video in a tiny window or on half the screen. They can then resize the window, which triggers resize events but not scroll events. Therefore, it’s worth combining several solutions.
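To see which images depend on JS to load at all, you can audit the raw HTML: images with a real src plus loading="lazy" work without scripts, while images whose real URL sits only in a data-src attribute need JS to swap it in. A hedged Python sketch; data-src is a common convention of lazy-loading libraries rather than a standard, so adjust the attribute name to whatever your library uses, and treat the class and function names as hypothetical.

```python
from html.parser import HTMLParser


class LazyImageAudit(HTMLParser):
    """Sorts <img> tags by whether they need JS to get their real URL."""

    def __init__(self):
        super().__init__()
        self.native_lazy = []   # real src + loading="lazy": works without JS
        self.js_dependent = []  # no real src: a script must fill it in

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src") or ""
        if src and not src.startswith("data:"):
            if (a.get("loading") or "").lower() == "lazy":
                self.native_lazy.append(src)
        else:
            # Missing src or an inline placeholder; the real URL usually sits
            # in data-src (a library convention, not a standard attribute).
            self.js_dependent.append(a.get("data-src") or "(unknown)")


def audit_images(html):
    parser = LazyImageAudit()
    parser.feed(html)
    parser.close()
    return parser.native_lazy, parser.js_dependent


html = ('<img src="/a.jpg" loading="lazy" alt="A">'
        '<img data-src="/b.jpg" alt="B">'
        '<img src="data:image/gif;base64,R0lGODlh" data-src="/c.jpg" alt="C">'
        '<img src="/d.jpg" alt="D">')
print(audit_images(html))  # (['/a.jpg'], ['/b.jpg', '/c.jpg'])
```

Images in the second list are the ones to verify in the rendered HTML, because they load only if the lazy-loading script fires for Googlebot.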

Page load time

As you know, page speed is a ranking factor for mobile search, and a slow website inconveniences not only users but also search robots. Google may delay rendering JS and reduce crawl frequency to save resources, so it’s essential to encode and transmit any content served to clients efficiently. A slow page can hurt rankings, so experts often use the following practices to make JavaScript SEO-friendly:

Reducing the amount of JS code on the page;

Deferring non-critical JavaScript until the main content is rendered in the DOM;

Inlining critical code;

Making sure pages are served efficiently under load (you can test this with a load-testing tool such as k6, formerly Load Impact).
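For the deferral practice, a simple audit is to list external scripts in the head that block HTML parsing, i.e., those without async or defer (module scripts are deferred by default). A minimal Python sketch with hypothetical names; it only checks the head and ignores inline scripts, which you may have inlined on purpose as critical code.

```python
from html.parser import HTMLParser


class ScriptAudit(HTMLParser):
    """Collects external <script> tags in <head> that block HTML parsing."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            a = dict(attrs)
            # Valueless attributes like `defer` appear with the value None.
            deferred = "async" in a or "defer" in a or a.get("type") == "module"
            if a.get("src") and not deferred:
                self.blocking.append(a["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def render_blocking_scripts(page_html):
    parser = ScriptAudit()
    parser.feed(page_html)
    parser.close()
    return parser.blocking


page = ('<html><head>'
        '<script src="/vendor.js"></script>'
        '<script src="/analytics.js" async></script>'
        '<script src="/app.js" defer></script>'
        '<script type="module" src="/main.mjs"></script>'
        '</head><body><script src="/late.js"></script></body></html>')
print(render_blocking_scripts(page))  # ['/vendor.js']
```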

Metadata

SPAs (single-page applications) use packages such as vue-meta or react-meta-tags to update meta tags when users navigate between router views, where the components are rendered. Routing works in React as follows:

When the user visits the site, a GET request (used to retrieve data from a specified server resource) is sent to the server for the ./index.html file;

The server sends this file, along with the React and React Router code, to the client;

The application is loaded on the client side;

If the visitor follows a link to another page, a request for the new URL is initiated;

React Router intercepts the request before it reaches the server, updates the React elements locally, and handles the URL change.

Essentially, the React components in the base ./index.html file (header, footer, and main content) are rearranged to display the new content. Hence the name "single-page application."

Note: React Helmet lets you set specific metadata for each page or "view" of an SPA. Without such a tool, search engines will most likely see identical metadata on every page, or none at all.
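One way to catch the identical-metadata problem is to collect the rendered HTML of several routes (e.g., from the URL Inspection Tool or a headless browser) and group the routes by title. A Python sketch under those assumptions; TitleParser and duplicate_titles are hypothetical names, and the same grouping works for meta descriptions.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Extracts the text of the <title> element."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def duplicate_titles(rendered_pages):
    """Maps each title shared by two or more URLs to those URLs."""
    by_title = defaultdict(list)
    for url, page_html in rendered_pages.items():
        parser = TitleParser()
        parser.feed(page_html)
        parser.close()
        by_title[parser.title.strip()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


pages = {
    "/": "<html><head><title>Shop</title></head></html>",
    "/shoes": "<html><head><title>Shop</title></head></html>",
    "/bags": "<html><head><title>Bags | Shop</title></head></html>",
}
print(duplicate_titles(pages))  # {'Shop': ['/', '/shoes']}
```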

Do or Don't?

Make sure you understand the technical implications of adding JavaScript: use it only when it serves a purpose on the page. E.g., Google wants to index content that satisfies its users. So if the code speeds up page loading, it’ll improve conversions and reduce the bounce rate. Otherwise, visitors won’t be comfortable using the site and may leave.

If the HTML text content and formatting already look good from a UX perspective, SEO professionals are better off not adding JS.

How Does Google Handle JavaScript?

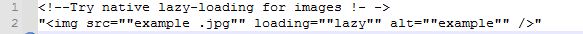

Googlebot processes JavaScript using the latest version of Chromium, in three main phases. This is how Google represents them:

Crawling

First, Googlebot takes a URL from the crawl queue and checks whether it’s allowed to crawl it by reading the robots.txt file. Then events can develop in two ways:

If the URL is disallowed, Googlebot skips it without sending a request.

If it’s allowed, Googlebot sends an HTTP GET request to the server, usually with a mobile user agent, and adds the URLs found in the href attributes of HTML links to the crawl queue.

Note: if you don’t want the crawler to follow a link, add the rel="nofollow" attribute.
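The crawling steps above can be illustrated with a toy crawler over an in-memory site. This is a simplified Python sketch, not how Googlebot is implemented: the dictionary stands in for HTTP requests, only <a href> links are followed, and rel="nofollow" is treated as a hard rule even though Google treats it as a hint. Also note that the standard library’s RobotFileParser matches rules by simple path prefix and doesn’t support Google’s * and $ wildcards.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit
from urllib.robotparser import RobotFileParser


class FollowableLinks(HTMLParser):
    """Collects <a href> values, skipping links marked rel="nofollow"."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        rel = (a.get("rel") or "").lower().split()
        if tag == "a" and a.get("href") and "nofollow" not in rel:
            self.hrefs.append(a["href"])


def crawl(start, site, robots_txt, agent="Googlebot"):
    """Breadth-first crawl of an in-memory site (a dict of URL -> HTML)."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    host = urlsplit(start).netloc
    queue, seen = deque([start]), {start}
    fetched, skipped = [], []
    while queue:
        url = queue.popleft()
        if not robots.can_fetch(agent, url):
            skipped.append(url)  # disallowed: skipped without a request
            continue
        html = site.get(url)     # stands in for the HTTP GET request
        if html is None:
            continue
        fetched.append(url)
        parser = FollowableLinks()
        parser.feed(html)
        for href in parser.hrefs:
            link = urldefrag(urljoin(url, href)).url  # absolute, no #fragment
            if urlsplit(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return fetched, skipped


site = {
    "https://example.com/": ('<a href="/shoes">Shoes</a> '
                             '<a href="/cart" rel="nofollow">Cart</a> '
                             '<a href="/admin/">Admin</a>'),
    "https://example.com/shoes": '<a href="/shoes/red">Red</a> <span onclick="go()">Blue</span>',
    "https://example.com/shoes/red": '<a href="/">Home</a>',
    "https://example.com/admin/": "<p>Private</p>",
}
fetched, skipped = crawl("https://example.com/", site, "User-agent: *\nDisallow: /admin/\n")
print(fetched)
print(skipped)
```

The span with an onclick handler is never discovered, and /admin/ is skipped without being requested, mirroring the two paths described above.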

Google then decides what resources are needed to render the content. Of course, the processing power required to download, parse, and execute JavaScript in bulk is massive, so at this first stage only the static HTML is processed, without the CSS and JS files.

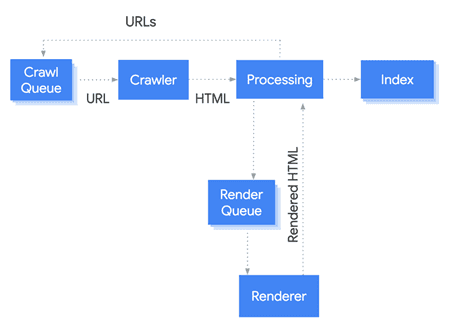

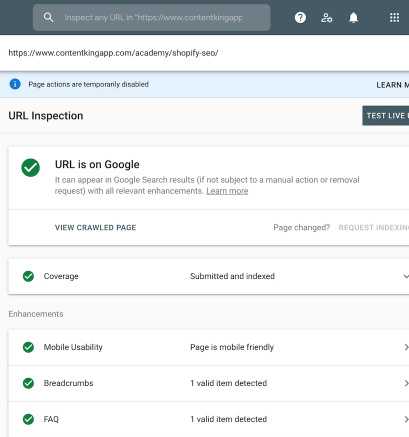

You can check how Google crawls your site with the URL Inspection Tool in Search Console (the "Crawled as" field shows whether the smartphone or desktop crawler was used).

It’s also necessary to understand which countries or visitors can’t see your content: if it’s blocked for them, it may be hidden from Googlebot too.

Some sites use user-agent detection to serve specific content to a particular crawler, which then sees different content than users do. Therefore, it’s essential to check pages with the Mobile-Friendly Test and the Rich Results Test.

Rendering

At this stage, Google renders the page to understand what the user sees. JS and any changes it makes to the DOM are processed. The crawler cannot perform user actions such as clicking, filling out forms, or other interactions with page elements, so all critical information that should be indexed must be available in the DOM without them. Google used Chrome 41 for rendering until 2019; since then, it has used an evergreen version of Chromium (starting with Chromium 74) that is constantly updated.

Googlebot queues all pages that return a 200 status code for rendering, except for those whose robots meta tag or header tells Google not to index them (noindex). Sometimes pages stay in the queue for a few seconds, and sometimes they may not be rendered for several days or even weeks: the robot must have enough resources to process them. Once it does, headless Chromium renders the page and executes the JavaScript, and Googlebot parses the rendered HTML. Then indexing occurs, and all links found on the rendered page are also queued for crawling.
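Because a noindex directive in the initial response can stop the page from being queued for rendering, JavaScript that later removes the tag might not help. A rough Python check of a response before rendering might look like this; skips_rendering is a hypothetical helper, and it treats any non-200 status as not rendered, in line with the rendering-queue rule described above.

```python
from html.parser import HTMLParser


class RobotsMeta(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() in ("robots", "googlebot"):
            content = (a.get("content") or "").lower()
            self.directives.update(d.strip() for d in content.split(","))


def skips_rendering(status, headers, html):
    """Rough check: would Google likely skip rendering this response?"""
    if status != 200:
        return True
    lowered = {k.lower(): v.lower() for k, v in headers.items()}
    header = lowered.get("x-robots-tag", "")
    parser = RobotsMeta()
    parser.feed(html)
    parser.close()
    blocked = {"noindex", "none"}  # "none" is shorthand for noindex, nofollow
    return bool(parser.directives & blocked) or any(d in header for d in blocked)


print(skips_rendering(200, {}, '<meta name="robots" content="noindex, follow">'))  # True
print(skips_rendering(200, {}, '<meta name="robots" content="index, follow">'))    # False
```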

Indexing

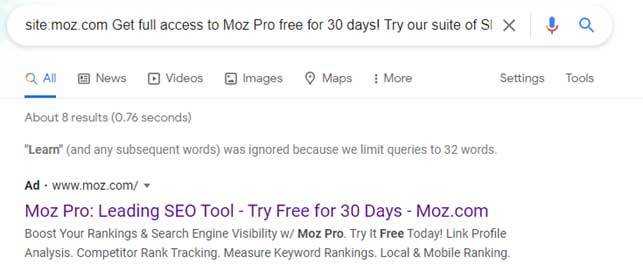

Once the bot has completed the previous steps, indexing starts. To test it, besides the URL Inspection Tool in Search Console mentioned above, the easiest way is to search for a piece of JS-loaded content. You can also use the site:{your website} "{fragment}" command.

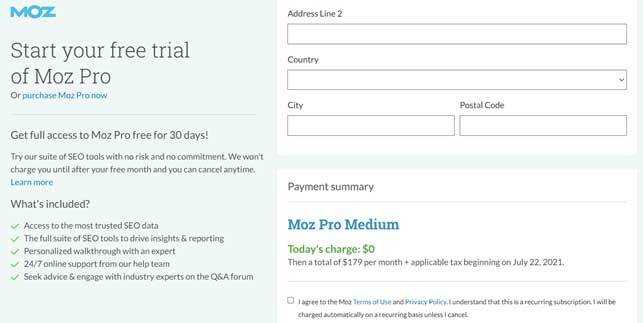

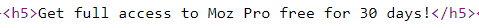

E.g., on the Moz website, part of the content is loaded on click using JS.

Let's check whether this text gets into the index. The fragment appears in the search results, which means the data has been indexed.

Note that such queries are best run in an incognito window to get accurate results; also keep in mind that the index sometimes contains an older version of the text.

Moreover, in this case the content is also present in the HTML and can be found in the page's source code.

That’s the reality for big brands, too: Google indexes 78% and 45% of the content of Nike and Sephora, respectively. Such a spread only confirms that it’s worth making crawling and rendering easier for Googlebot and its Web Rendering Service (WRS).

Note: check out Google's documentation on the Web Rendering Service to understand how it declines permission requests, works statelessly, and flattens the light DOM and shadow DOM.

If Google cannot see an essential piece of content on some pages, this may hurt their ability to rank high in the SERP. The situation gets worse if Google can’t crawl links to related products.

Understanding Rendering

Whether you run into gaps when JavaScript elements are indexed depends a lot on how the site renders its code. That’s why it’s worth knowing the difference between Server-Side Rendering (SSR), Client-Side Rendering (CSR), and Dynamic Rendering. Remember we said that you could go through nine circles of hell proving to a developer that there is an error in the code? You can avoid much of that controversy if you understand the main rendering methods. This is the most critical part of JavaScript SEO for Google.

Server-Side Rendering

Server-side rendering is when JavaScript is executed on the server, and the rendered HTML page is sent to the client (a bot, browser, etc.).

Crawling and indexing work the same way as for plain HTML, so JavaScript shouldn’t cause any trouble.

How it operates:

The user visits the site;

The browser requests the page from the server;

The server processes the request and renders the HTML;

The rendered HTML is sent back and displayed on the screen.

SSR is often quite a difficult task for a developer, but tools such as Gatsby and Next.js (for React), Angular Universal (for Angular), and Nuxt.js (for Vue.js) help implement it.
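To make the difference concrete, here is what a crawler receives from each approach for the same hypothetical product page. It’s a deliberately simplified Python sketch, not how any framework works internally: real SSR frameworks such as Next.js or Nuxt.js also send the JS bundle so the page becomes interactive in the browser (hydration), which the deferred script tag only hints at.

```python
import html


def render_ssr(product):
    """Server-side rendering: the HTML response already contains the content."""
    name = html.escape(product["name"])
    price = html.escape(product["price"])
    return (
        "<!doctype html><html><head>"
        f"<title>{name}</title></head><body><main>"
        f"<h1>{name}</h1><p>Price: ${price}</p></main>"
        # The bundle still loads to make the page interactive (hydration).
        '<script src="/app.js" defer></script></body></html>'
    )


def render_csr_shell():
    """Client-side rendering: an empty shell that /app.js fills in the browser."""
    return (
        "<!doctype html><html><head><title>Shop</title></head>"
        '<body><div id="root"></div><script src="/app.js"></script></body></html>'
    )


product = {"name": "Red sneakers", "price": "49.99"}  # hypothetical data
print("Red sneakers" in render_ssr(product))  # True
print("Red sneakers" in render_csr_shell())   # False
```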

Advantages of server-side rendering for SEO:

The page loading speed is high, so crawlers can quickly move on to the next page. That makes better use of the crawl budget and improves user experience.

Every page element is available in the rendered HTML. You don't rely on the visitor's browser technology, which, if outdated, can result in partial rendering.

Disadvantages:

It cannot render content that isn’t part of the HTML generated on the server (e.g., comments, UGC, recommendation widgets loaded later).

Since pages are rendered on your company's servers, the process is costly, and you have to pay for that capacity.

Client-side rendering

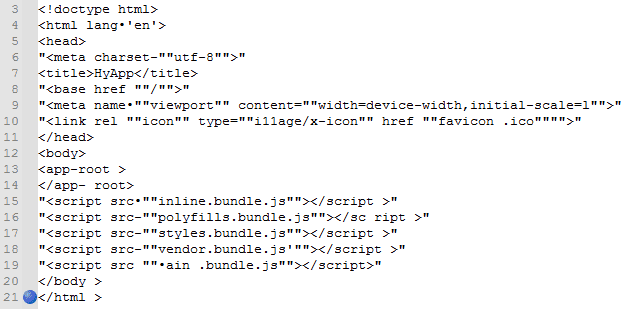

To a large extent, CSR is the opposite of SSR: here, the client executes the JS and builds the DOM. Instead of getting all the content in the HTML file, you end up with bare-bones HTML plus a JS file that fetches and assembles the content in the browser. When you look into the source code of such sites, you’ll see basic HTML, div tags, and links to JS files.

The source won’t contain the page content: headings, text, reviews, pictures, etc. So a bot that doesn’t render JS won’t see it either. That’s bad because, without these essential components, promoting the website in search engines is nearly impossible.

Advantages:

Rendering costs are borne by the client and are therefore lower for the company than with SSR.

It decreases the load on your servers.

It’s the default for JS sites, which makes client-side rendering easy to set up.

Disadvantages:

CSR increases the probability of a bad user experience: page load time can increase, which provokes a spike in the bounce rate.

Impact on bots: occasionally, JS content can be lost and never make it into the index during the second wave of indexing.

Note: the "second wave of indexing" refers to Google first crawling and indexing the HTML code and then returning to the page for JavaScript rendering when Googlebot has enough resources.

Dynamic Rendering

It’s an alternative to SSR and a viable way to serve a JavaScript site: CSR content is sent to users' browsers, and SSR content to crawlers. By the way, Bing recommends using prerender.io, Puppeteer, and Rendertron. The JavaScript-generated content that users and crawlers see will be identical, but it differs in the level of interactivity.

Pre-rendering is used for search engines: a fully rendered page with HTML and CSS is cached. Then the client is checked: if it’s a bot such as Googlebot, it receives the cached page; if it’s a user, the page is loaded as intended.

Note: dynamic rendering isn’t cloaking as long as bots and users get similar content. It becomes cloaking when completely different content is served.
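The request-routing logic of dynamic rendering can be sketched in a few lines. This Python example is an illustration under assumptions: the bot list is illustrative and incomplete, the pre-rendered cache is a plain dictionary (in practice, a tool such as Rendertron or prerender.io would fill it), and user-agent strings can be spoofed, so production setups often also verify crawlers, e.g., via a reverse DNS lookup. To stay clear of cloaking, the cached HTML must contain the same content users see.

```python
BOT_TOKENS = ("googlebot", "bingbot", "yandexbot", "duckduckbot")  # illustrative, incomplete


def is_search_bot(user_agent):
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_response(path, user_agent, prerendered, csr_shell):
    """Dynamic rendering: cached pre-rendered HTML for bots, the JS app for users."""
    if is_search_bot(user_agent) and path in prerendered:
        return prerendered[path]  # static HTML snapshot, no JS execution needed
    return csr_shell              # regular client-side rendered app


prerendered = {"/shoes": "<html><body><h1>Shoes</h1></body></html>"}
shell = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'
googlebot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(choose_response("/shoes", googlebot, prerendered, shell))
```

Falling back to the shell when a path isn’t cached keeps the site working even if the pre-rendering step lags behind.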

Advantages:

Dynamic rendering is relatively inexpensive, as you only pay for pre-rendering pages for bots.

Search engine robots get your content without having to render it. That’s an easier and less resource-intensive way to give bots what they want.

With dynamic rendering, robots load pages faster, which can result in more indexed and ranked pages and increased traffic and income.

Disadvantages:

It works only as a temporary workaround: it exists because JavaScript processing is hard and not all crawlers can do it, and it adds complexity to maintain.

If bots and users receive different content, dynamic rendering turns into cloaking.

Common Errors with JavaScript SEO and How to Fix Them

Errors can vary widely, and so can their solutions. To get started, do the following:

Inspect the URL in Google Search Console to analyze how the bot sees the page and its code;

Use the site: search operator to check whether individual elements are indexed;

Use Chrome's built-in DevTools to diagnose problems quickly.

Problem #1: Blocking .js files in robots.txt can prevent Google from rendering pages properly.

Solution: Allow these files to be crawled.

Problem #2: The crawler often doesn’t wait for JS elements to appear if they take too long to load. Therefore, the page, or at least its JS content, may not be indexed.

Solution: Fix the timeout issue by making the content load faster (e.g., by reducing and deferring JS).

Problem #3: Pagination where links to pages beyond the first work only on click. Such pages can’t be crawled because bots don’t click buttons.

Solution: Use static links to help Googlebot discover the pages.
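As a sketch of that fix, the server (or SSR step) can render pagination as plain <a href> links with a crawlable URL for each page; a "Load more" button can still be layered on top with JS. The Python helper below is hypothetical, and the ?page=N parameter is just one common URL scheme.

```python
from urllib.parse import urlencode


def pagination_links(base_path, current, total):
    """Renders crawlable <a href> links for every page of a paginated list."""
    parts = []
    for page in range(1, total + 1):
        # Page 1 keeps the clean URL; later pages get a ?page=N parameter.
        href = base_path if page == 1 else base_path + "?" + urlencode({"page": page})
        marker = ' aria-current="page"' if page == current else ""
        parts.append(f'<a href="{href}"{marker}>{page}</a>')
    return "<nav>" + "".join(parts) + "</nav>"


print(pagination_links("/shoes", 2, 3))
# <nav><a href="/shoes">1</a><a href="/shoes?page=2" aria-current="page">2</a><a href="/shoes?page=3">3</a></nav>
```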

Problem #4: Lazy-loading page content with JS.

Solution: Try not to defer the loading of content you want indexed (e.g., images).

Problem #5: Client-side rendered code can’t return server error status codes (such as 404) for missing pages.

Solution: Redirect errors to a page that returns a 404 status code.

Problem #6: Unnecessary characters in the URL (for instance, #) make pages impossible to index, because Google generally doesn’t treat the part after the # as a separate page. The difference is obvious: domain.com/pagename is correct, while domain.com/#/pagename or domain.com#pagename is incorrect.

Solution: Create static URLs.

Of course, indexing problems can also be caused by unoptimized content. We'll talk about this next.

Making Your JavaScript Site SEO-friendly

On-page SEO

On JS sites, content, title tags, and meta descriptions can end up duplicated, omitted, or multiplied; image alt attributes can be set inconsistently; noindex directives can be added to pages blocked in robots.txt, where crawlers can’t even see them; and so on. Therefore, stick to the JavaScript SEO best practices described here.

Duplicate content

That’s a problem that follows from the previous one: on JavaScript sites, the same content can be available at different URLs. Common root causes are inconsistent letter case, different ID formats, etc. E.g.:

your-website-domain.com/Efg

your-website-domain.com/efg

your-website-domain.com/456

your-website-domain.com/?id=456

To fix this, choose a single version to be indexed and point the other versions to it with canonical tags.
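Normalization rules like these can be expressed as a function that maps every variant to one canonical URL, which you’d then output in a canonical link tag. A hypothetical Python sketch based on the examples above; it assumes your server treats paths case-insensitively (URL paths are case-sensitive by default) and that /?id=456 and /456 show the same item.

```python
from urllib.parse import parse_qs, urlencode, urlsplit, urlunsplit


def canonical_url(url):
    """Maps URL variants of the same page to one preferred form (hypothetical rules)."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"          # assumes case-insensitive paths
    params = parse_qs(parts.query)
    if path == "/" and "id" in params:        # assumes /?id=456 == /456
        path = "/" + params.pop("id")[0]
    query = urlencode(sorted(params.items()), doseq=True)  # stable parameter order
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, query, ""))


def canonical_tag(url):
    return f'<link rel="canonical" href="{canonical_url(url)}">'


for variant in ["https://your-website-domain.com/Efg",
                "https://your-website-domain.com/456",
                "https://your-website-domain.com/?id=456"]:
    print(canonical_tag(variant))
```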

Allow crawling

The crawler must have access to resources to render pages and display them in the SERP correctly. So allow Googlebot to crawl .js and .css files in robots.txt (e.g., Allow: /*.js and Allow: /*.css).
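You can sanity-check such rules before deploying them. The Python sketch below uses the standard library’s RobotFileParser to list which resources Googlebot would be blocked from; the paths are hypothetical. Keep in mind that this parser applies the first matching rule in file order and doesn’t understand Google’s * and $ wildcards (Google applies the most specific matching rule), so the example lists the more specific Allow lines first and uses plain path prefixes.

```python
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt, urls, agent="Googlebot"):
    """Returns the URLs that the given robots.txt disallows for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


BEFORE = """\
User-agent: Googlebot
Disallow: /static/
"""

AFTER = """\
User-agent: Googlebot
Allow: /static/js/
Allow: /static/css/
Disallow: /static/
"""

RESOURCES = [
    "https://example.com/static/js/app.js",
    "https://example.com/static/css/main.css",
    "https://example.com/static/private/report.pdf",
]

print(blocked_resources(BEFORE, RESOURCES))  # all three URLs
print(blocked_resources(AFTER, RESOURCES))   # ['https://example.com/static/private/report.pdf']
```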

SEO "plugin" type

For frameworks, these plugins are also called modules. To find one for setting common tags, search for "framework name + SEO module"; for React, e.g., you’ll find React Helmet. Their functionality is often similar and focused on the needs of a particular framework.

Sitemap

JavaScript frameworks usually have routers with add-on modules that generate a sitemap. To find one, search for "framework name + router sitemap," e.g., "Vue router sitemap."
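If your framework has no such module, generating a basic sitemap from the router’s static routes is straightforward. A Python sketch with a hypothetical route list: it skips dynamic patterns such as /product/:id, whose real URLs would have to come from your database, and emits only the required loc element of the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(base_url, routes):
    """Builds a minimal sitemap.xml string from static router paths."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for route in routes:
        if ":" in route or "*" in route:
            continue  # dynamic pattern such as /product/:id; needs real IDs
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = base_url.rstrip("/") + route
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


ROUTES = ["/", "/shoes", "/bags", "/product/:id"]  # hypothetical router paths
print(build_sitemap("https://example.com", ROUTES))
```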

URLs and History API

We’ve already mentioned that you shouldn’t use hashes (#) in URLs. That’s a big problem, especially for Vue and some earlier versions of Angular, which often rely on hash-based routing. To fix it, work with the developer to switch the router to "history" mode, which uses the History API instead of fragments so that Googlebot can find the links.
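When migrating, it helps to map old hash-based URLs to their History API equivalents, e.g., to audit internal links or build a redirect map. The hypothetical Python helper below treats only #/ and #! fragments as routes, so ordinary in-page anchors like #section-2 are left alone (which also means domain.com#pagename isn’t converted, because it’s indistinguishable from an anchor). Since browsers never send the fragment to the server, the actual redirect would have to run in client-side JS.

```python
from urllib.parse import urlsplit, urlunsplit


def uses_fragment_routing(url):
    """True for hash-routed URLs like /#/shoes or /#!/shoes (not plain anchors)."""
    return urlsplit(url).fragment.startswith(("/", "!"))


def to_history_url(url):
    """Converts a hash-routed URL to the equivalent History API path."""
    if not uses_fragment_routing(url):
        return url
    parts = urlsplit(url)
    route = parts.fragment.lstrip("!").lstrip("/")  # "#!/shoes" or "#/shoes" -> "shoes"
    path = parts.path.rstrip("/") + "/" + route
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, ""))


print(to_history_url("https://domain.com/#/pagename"))  # https://domain.com/pagename
```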

Lazy loading

How do you make lazy loading SEO-friendly? First, use your framework's tools to handle it: in React, for example, React.lazy and Suspense are the most popular (they lazy-load components). We recommend lazy-loading images so that they download when the user is about to see them.

404 Page Not Found

Since client-side frameworks don’t control the server's HTTP status codes, soft 404 errors (error pages served with a 200 status) are common. So use a redirect to a page that responds with a 404 code. Alternatively, add a noindex robots meta tag to the error page, even though the status code returned will still be 200.
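A related approach, different from the redirect described above, is to let the server know the app's valid routes so it can serve the same JS shell with a real 404 status for unknown paths; the client router can still render a friendly error view. A minimal WSGI sketch in Python; KNOWN_ROUTES is a hypothetical static route table, and dynamic routes (e.g., product IDs) would require a data lookup.

```python
KNOWN_ROUTES = {"/", "/shoes", "/bags"}  # hypothetical; must match the client router
SHELL = (b'<!doctype html><html><body><div id="root"></div>'
         b'<script src="/app.js"></script></body></html>')


def app(environ, start_response):
    """WSGI app: the same JS shell for every path, with a real 404 for unknown routes."""
    path = environ.get("PATH_INFO") or "/"
    status = "200 OK" if path in KNOWN_ROUTES else "404 Not Found"
    start_response(status, [("Content-Type", "text/html; charset=utf-8")])
    return [SHELL]


def status_for(path):
    """Calls the app once with a minimal environ and returns its status line."""
    statuses = []
    app({"REQUEST_METHOD": "GET", "PATH_INFO": path},
        lambda status, headers: statuses.append(status))
    return statuses[0]


print(status_for("/shoes"))         # 200 OK
print(status_for("/no-such-page"))  # 404 Not Found
```

To try it locally, you could serve it with wsgiref.simple_server.make_server("", 8000, app).serve_forever() and request an unknown path.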

Conclusion

SEO for JavaScript pages is a pretty complex topic, but we hope this guide was helpful to you.

Of course, it’s up to you to decide whether you need JS on your site. Knowing all its advantages and disadvantages, you’ll better understand how to handle content and eliminate user experience problems. Fully mastering JavaScript takes a little time and a lot of work. But as they say, diligence is the mother of success!