Google MUM (Multitask Unified Model) is a new technology for answering complex questions that have no simple, direct answer. It was introduced just a few weeks ago and has already created a stir in SEO circles. It has not yet been confirmed whether the search engine already uses the algorithm or, if not, when it will be rolled out and how it will affect SERP rankings.

Like BERT, it is built on a Transformer architecture, but it makes getting detailed answers to complex queries much easier. Google's own example is preparing to hike Mt. Fuji next fall. To find out what to expect, what equipment to choose, how difficult the routes are, and how much the temperature fluctuates, you would have to run a series of carefully thought-out searches. There is no single, unambiguous answer to such a query; a good answer is detailed and takes many factors into account. This example is typical: every day, users look for answers to complex problems, performing an average of eight queries to solve each one. MUM is designed to help with such complex tasks and reduce the number of searches. Moreover, according to Google, it is 1,000 times more powerful than BERT!

In this article, we will discuss the features of MUM, this technological marvel, and look at its impact on SEO.


What is MUM?

Google MUM is an algorithm that helps answer complex queries: it is trained across 75 different languages and can both understand and generate language. Unlike BERT, it is trained on many tasks at once, which helps it develop a more comprehensive understanding of information. MUM is also multimodal: it understands information not only in text but also in images. Google expects to extend it to more modalities, such as video and audio, in the future.

Let's go back to the example of climbing Mt. Fuji in the fall. In this case, the answer will have several parts. When it comes to hiking, preparation most likely includes fitness training and choosing suitable equipment. Since fall is a stormy season, a waterproof jacket is indispensable. In addition, the algorithm can point to related subtopics covered in videos, images, or articles: for instance, the best training exercises or recommended outfits.

To date, there are no research papers or patents describing the algorithm, and there is no trademark called MUM. However, a few studies discuss the kinds of search problems that multitask technology could solve. Let's look at them.

How Does Google MUM Work?

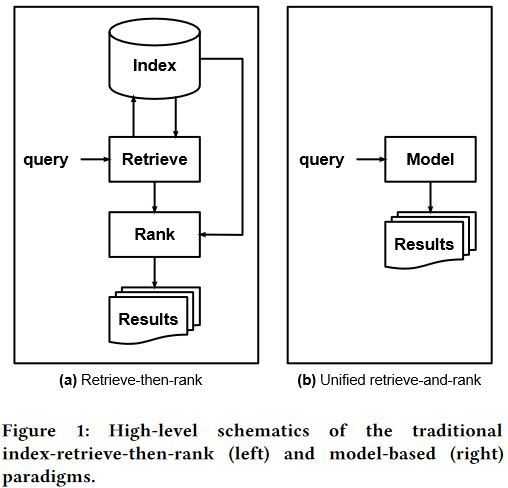

Google researcher Donald Metzler published a paper arguing that search needs a new approach to give detailed answers to complex questions. Information retrieval is the backbone of every modern search engine, but the classic indexing-and-ranking pipeline is not well suited to such tasks. Search engines typically combine keyword matching and semantic signals to build a preliminary list of candidate results. That list then passes through one or more re-ranking stages, which are likely to be neural learning-to-rank models.
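To make the conventional pipeline concrete, here is a minimal Python sketch of retrieve-then-rank. Everything in it is a hypothetical illustration, not Google's implementation: the four-document corpus is invented, stage 1 uses plain keyword overlap, and stage 2 uses a simple phrase-match (bigram) score as a stand-in for the learned, likely neural, re-ranker described above.

```python
# A toy retrieve-then-rank pipeline (hypothetical; not Google's implementation).

# Invented corpus: document id -> text.
CORPUS = {
    "d1": "hiking in fall jacket sale waterproof boots",
    "d2": "choosing a waterproof jacket for autumn",
    "d3": "mt fuji climbing routes",
    "d4": "fitness training for mountain hiking",
}


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def retrieve(query: str, corpus: dict[str, str], k: int = 3) -> list[str]:
    """Stage 1: cheap keyword matching builds a candidate list."""
    q_terms = set(tokenize(query))
    scored = []
    for doc_id, text in corpus.items():
        overlap = len(q_terms & set(tokenize(text)))
        if overlap > 0:
            scored.append((overlap, doc_id))
    # Most shared terms first; ties broken by doc id so results are deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]


def bigrams(tokens: list[str]) -> set[tuple[str, str]]:
    return set(zip(tokens, tokens[1:]))


def rerank(query: str, candidates: list[str], corpus: dict[str, str]) -> list[str]:
    """Stage 2: re-order only the candidates with a more expensive score.
    Here the score counts query phrases (bigrams) found in the document;
    a real engine would use a learned ranking model instead."""
    q_bigrams = bigrams(tokenize(query))

    def score(doc_id: str) -> int:
        return len(q_bigrams & bigrams(tokenize(corpus[doc_id])))

    return sorted(candidates, key=lambda d: (-score(d), d))


if __name__ == "__main__":
    query = "waterproof jacket for fall hiking"
    candidates = retrieve(query, CORPUS)
    print(candidates)                         # ['d1', 'd2', 'd4']
    print(rerank(query, candidates, CORPUS))  # ['d2', 'd1', 'd4']
```

The keyword-heavy page d1 wins stage 1 on raw term overlap, but the phrase-aware second stage promotes d2, which actually talks about a waterproof jacket. The split between a cheap, broad first pass and a costlier pass over a short candidate list is exactly the structure the unified-model proposal questions.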

According to Metzler, the index should no longer be a separate component: indexing, retrieval, and ranking are merged into, and replaced by, a single unified model that encodes the knowledge contained in the corpus. The screenshot above, taken from the paper, shows the difference between the conventional retrieve-then-rank approach and the unified model.
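For contrast, the sketch below is a deliberately tiny, hypothetical stand-in for the model-based idea: there is no index and no separate ranking stage, and a single set of learned weights maps a query straight to a document identifier. The class name, the training pairs, and the choice of a multiclass perceptron are all assumptions made for illustration; the paper envisions large neural models, not a perceptron.

```python
from collections import defaultdict


class ToyUnifiedModel:
    """Hypothetical toy: the corpus is encoded in weights, and retrieval
    means predicting a document id directly (no inverted index)."""

    def __init__(self) -> None:
        # weights[doc_id][term] -> learned association strength.
        self.weights: dict[str, dict[str, float]] = defaultdict(lambda: defaultdict(float))
        self.doc_ids: list[str] = []

    def _score(self, doc_id: str, terms: list[str]) -> float:
        return sum(self.weights[doc_id][t] for t in terms)

    def predict(self, query: str) -> str:
        terms = query.lower().split()
        # max() keeps the first doc id on ties, so an untrained model returns doc_ids[0].
        return max(self.doc_ids, key=lambda d: self._score(d, terms))

    def train(self, pairs: list[tuple[str, str]], epochs: int = 5) -> None:
        """Multiclass perceptron over (text, doc_id) pairs. Feeding in the
        documents' own text plays the role of 'indexing'."""
        for _, doc_id in pairs:
            if doc_id not in self.doc_ids:
                self.doc_ids.append(doc_id)
        for _ in range(epochs):
            for text, gold in pairs:
                pred = self.predict(text)
                if pred != gold:
                    for t in text.lower().split():
                        self.weights[gold][t] += 1.0
                        self.weights[pred][t] -= 1.0


if __name__ == "__main__":
    model = ToyUnifiedModel()
    model.train([
        ("how to prepare for climbing mt fuji in fall", "fuji-guide"),
        ("best waterproof jackets for hiking", "jackets"),
        ("mt fuji autumn preparation", "fuji-guide"),
        ("rain jacket hiking", "jackets"),
    ])
    print(model.predict("fuji in autumn"))     # fuji-guide
    print(model.predict("waterproof jacket"))  # jackets
```

Even this toy shows a practical trade-off of the unified approach: adding or changing a document means retraining the model rather than just updating an index.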

There is an apparent connection between this paper, published in May this year, which stresses the importance of a single unified model, and the announcement of Google's breakthrough MUM algorithm.

Interestingly, in December 2020, another study was published describing the Multitask Mixture of Sequential Experts for User Activity Streams, or MoSE. Apart from the similar-sounding names, it also overlaps with the MUM breakthrough in substance. Guess who is among its authors? The same Donald Metzler. The paper describes neural multitask learning that uses search and browsing history to model multi-step search behavior. The algorithm studies successive clicks and searches to predict which responses will satisfy a specific information need. Notably, MoSE models the searcher's behavior using Long Short-Term Memory (LSTM) networks rather than looking only at the query and its context: the sequence of actions a user takes shows which response will be satisfying. The paper also mentions a type of algorithm optimized for video search that is based on three predictions:

- what people want to see;

- what will satisfy a request;

- which materials get more engagement.

MoSE is designed to handle a variety of data types. Seen in the context of MUM, this helps explain what actions a searcher takes to find suitable answers: user activity streams from various sources are modeled, along with how they interact. In addition, MoSE can explicitly predict a user's sequence of searches and actions, which could reduce the number of queries needed (eight on average) and provide better answers to complex problems.

The fewer computational resources an algorithm needs, the more powerful it can be made, because it has more room to scale. This may explain the claim that MUM is 1,000 times more powerful than BERT. MoSE strikes a balance between resource cost and search quality. The authors showed this experimentally: they tested the architecture against seven alternatives and, using Gmail as an example, demonstrated the system's flexibility and efficiency.

Note: until 2016, the famous Penguin update was so resource-intensive that it could only be run a couple of times a year! After that, it could operate in real time at minimal cost.

The algorithm is robust across different levels of resource savings: when 80% savings are required, it still retains about 8% more clicks. That makes it easier to retrain and gives it great versatility.

Like BERT, MUM is built on the Transformer architecture. MoSE doesn't use Transformers, but it can be extended with them, because its building blocks are interchangeable (other sequential modules, such as LSTMs or GRUs, can be used here). This suggests that MoSE could be a component of MUM.
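The multi-gate mixture-of-experts idea that MoSE builds on can be sketched in a few lines of Python. This is a hypothetical, forward-pass-only illustration with hand-set numbers, not the MoSE implementation: the two "experts" are trivial sequence summarizers standing in for the LSTMs (or GRUs, or Transformers) mentioned above, and each task gets its own softmax gate that decides how much to trust each expert. The function names and task names are assumptions.

```python
import math

Vector = list[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_expert(seq: list[Vector]) -> Vector:
    """Toy sequential expert: averages every step of the activity stream."""
    return [sum(step[i] for step in seq) / len(seq) for i in range(len(seq[0]))]


def last_step_expert(seq: list[Vector]) -> Vector:
    """Toy sequential expert: looks only at the most recent action."""
    return list(seq[-1])


def multi_gate_forward(seq, experts, gates):
    """One shared pool of experts, one gate per task.

    gates maps a task name to one weight vector per expert; the gate scores
    each expert from the latest step and mixes the expert outputs with the
    resulting softmax weights."""
    outputs = [expert(seq) for expert in experts]
    latest = seq[-1]
    mixed = {}
    for task, weight_vectors in gates.items():
        probs = softmax([sum(w * f for w, f in zip(wv, latest)) for wv in weight_vectors])
        mixed[task] = [sum(p * out[i] for p, out in zip(probs, outputs))
                       for i in range(len(outputs[0]))]
    return mixed


if __name__ == "__main__":
    # Each step is a made-up 2-feature encoding of one user action.
    stream = [[1.0, 0.0], [0.0, 1.0]]
    gates = {
        "satisfaction": [[0.0, 0.0], [0.0, 0.0]],  # equal trust in both experts
        "engagement": [[0.0, 0.0], [0.0, 100.0]],  # strongly prefers the last-step expert
    }
    result = multi_gate_forward(stream, [mean_expert, last_step_expert], gates)
    print(result["satisfaction"])  # [0.25, 0.75]
    print(result["engagement"])
```

Because the experts are plain callables, swapping in a different sequence model only changes the `experts` list; the per-task gates stay the same. That is the kind of modularity that would let an LSTM-based design be extended with Transformers.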

Google files many patents for its algorithms every year, but MoSE is not among them. And while other studies often point out shortcomings, this one reads more like a success story: the system achieves its results without a significant expenditure of resources.

Summary

MUM is presented as an Artificial Intelligence (AI) technology, while its counterpart, MoSE, is presented as Machine Intelligence (MI). Most likely there is no difference between the two labels: in Google's own classification, AI research is filed under MI. Of course, there is no way to say definitively whether the MoSE mechanism is implemented in MUM. The two are very similar, although it is equally plausible that they are unrelated. Either way, MoSE is a very successful algorithm that can be extended with Transformers.

But however the story of these algorithms unfolds, the most important thing is to understand how they will affect search.

How Can Google MUM Affect SEO?

SEO specialists must adapt every time a search engine changes its algorithms. A couple of years ago, when BERT was introduced, it had no significant impact on website performance. However, the arrival of a far more powerful AI is bound to have an effect. If users describe their queries in simple, natural language, sites will need to communicate the same way. Let's look at how the algorithm could affect SEO.

Eliminating language barriers

Language is an obstacle for many users, for example when websites are not available in several languages. MUM can break down these barriers: it can learn from sources written in 75 languages and surface relevant information from them, even if you typed your query in another language! Say you are looking for information about the same Mt. Fuji, and the search engine finds results in Japanese, which you don't speak. MUM can transfer the knowledge from these sites into your preferred language and provide extended answers (where to enjoy the best views, which popular souvenir shops to visit, etc.). In other words, information can be delivered in the language of the region the search comes from.

Simultaneous understanding of multiple tasks

Handling several tasks at once helps the algorithm understand a query better. Take two mountains, Adams and Fuji: suppose you have climbed the first and want to know how to prepare for climbing the second in the fall. MUM can understand that you are comparing two mountains, so it focuses on relevant differences, such as geology, rather than on flora and fauna. It can also understand that, for the hike to be successful, preparation means good physical condition and therefore training. And since the trip is in autumn, it is also worth looking for the right outfit.

Language generation

Have you heard of GPT-3? It is a language model capable of generating human-like text. Its output is so good that it is hard to tell apart from text written by people, which worries many researchers. Some even consider it dangerous, while others think it is a miracle of miracles, the most incredible thing humans have ever created. Compared with GPT-3, MUM has every chance of taking the lead. To see why, it is worth understanding how the form of linguistic symbols relates to what they represent in the real world. A model inside a computer can "understand" the shapes of words, but not their meaning as a person perceives it. In addition, Google says it is working to reduce bias in search and to cut the carbon footprint of each new model.

Multimodality

This is a massive advantage of MUM over GPT-3 and LaMDA: the system can understand information in different formats, such as text and images, and in the future possibly video. In his blog post, Pandu Nayak described how you could take a photo of your hiking boots and ask: “Can I use these to hike Mt. Fuji?” MUM would connect the image with your text question and could then point you to a blog with a list of recommended boots and other gear. Convenient, isn't it?
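One common way to let a photo and a text question work as a single query is to map both into a shared embedding space and combine them. Google has not published how MUM does this, so the following Python sketch is only a hypothetical illustration of late fusion: the three-dimensional vectors (roughly "footwear", "mountain", "city") are hand-made, whereas a real system would produce them with trained image and text encoders.

```python
import math

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def fuse(image_vec: Vector, text_vec: Vector, image_weight: float = 0.5) -> Vector:
    """Late fusion: a weighted average of the image and text query embeddings."""
    return [image_weight * i + (1 - image_weight) * t for i, t in zip(image_vec, text_vec)]


def rank_pages(query_vec: Vector, pages: dict[str, Vector]) -> list[str]:
    """Order pages by cosine similarity to the query, most similar first."""
    return sorted(pages, key=lambda name: -cosine(query_vec, pages[name]))


# Hand-made embeddings; dimensions ~ (footwear, mountain, city).
PAGES = {
    "hiking-boots-for-fuji": [0.7, 0.7, 0.0],
    "city-sneakers": [0.8, 0.0, 0.6],
    "fuji-trail-map": [0.0, 1.0, 0.0],
}
photo_of_boots = [0.9, 0.1, 0.0]
question = [0.1, 0.9, 0.0]  # "Can I use these to hike Mt. Fuji?"

if __name__ == "__main__":
    print(rank_pages(question, PAGES)[0])                        # fuji-trail-map
    print(rank_pages(fuse(photo_of_boots, question), PAGES)[0])  # hiking-boots-for-fuji
```

With the text alone, the trail map ranks first; once the photo is fused in, the boot guide comes out on top, which mirrors the boots example above.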

The importance of this is best illustrated by comparison with the human brain. We perceive the world's splendor through multiple senses: vision, hearing, touch, and so on. The brain integrates this combined experience into a single view of reality.

Most events and even objects carry many different kinds of information. Imagine a lemon: you know its taste and smell, its oval shape, and its porous peel. That is electromagnetic (visual), mechanical (tactile), and chemical (taste and smell) information about the same object. The brain's multisensory processing is what lets us combine these modalities. How interconnected everything is!

MUM will be the first AI algorithm to evaluate and combine data from different modalities much as a human does. We will discuss the ethics of this later in the article.

So SEO may become unnecessary and obsolete, because the rules of the game will change. Since its inception, Google has been trying to build the perfect search engine, one that processes queries naturally. When BERT was launched in 2019, Google acknowledged that search could still return wrong results because it did not properly understand the question.

Note: one reason for this is that people type queries in “keyword-ese”, strings of words they think the search engine will understand, instead of asking questions naturally.

Naturally, talking to a search engine involves its own style of communication, different from conversation between people. We must give credit to BERT, which took the interpretation of key phrases to an entirely new level. To keep track of this, many specialists use tools such as the SpySERP checker to monitor keywords. Until MUM is rolled out, SEO remains the only way to optimize for how users search. BERT also reduced the influence of keywords on a site's ranking: what matters is that the user's request matches relevant information, regardless of the exact terms.

We should expect a revolution in search, and it cannot be ruled out that SEO will become obsolete. The algorithm will turn into a kind of personal assistant that does all the work for you. Why would users agonize over every word in the search bar when they can simply ask in natural language, even in their own dialect? Keywords may not matter as much as they do today, but they will still be part of the content.

Note: MUM does not reward stuffing text with terms, and keyword-driven content optimization loses its purpose. Anyone who tries to deceive the search engine is doomed to fail. Pages made for crawlers rather than people will disappear.

Google's ethics regarding its inventions

Google conducts thorough research before launching any product. Having human raters evaluate every search update is critical to delivering relevant results to users. For example, Google's Search Quality Rater Guidelines help raters judge how good the algorithms' results are.

Like BERT, MUM will also have to go through an assessment process. The company is committed to looking for patterns that could indicate bias in machine learning.

As you know, training large neural networks requires enormous computing power. Google is committed to reducing the carbon footprint of systems such as MUM while they improve the search experience.

Algorithm release date

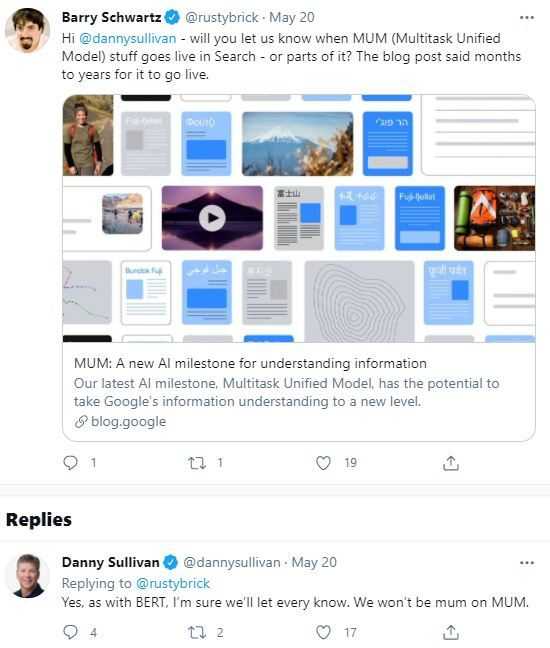

Barry Schwartz, CEO of RustyBrick, says he asked Google about the launch on Twitter. Danny Sullivan, Google's Public Liaison for Search, replied on Google's behalf that they would let people know as soon as MUM launches.

For its part, the company announced on its blog that MUM-powered features could arrive in the coming months and years. Although Google is still exploring the technology and the project is at an experimental stage, it is a direct path to the future: a search engine that understands natural language and interprets it much as the human brain does, combining multiple senses and modalities.

Note: Google is currently running pilot projects to better understand the types of user queries MUM could solve, which will help in developing the system.

Conclusion

Google announced MUM at Google I/O, its annual developer conference. The sensational news promises a revolution in the world of search engines. MUM's strength lies in multilingualism, multitasking, and multimodality, working in a way that resembles the human brain. Not only the form of symbols will be perceived, but also their context. So if Google can really read, hear, and see content in 75 languages and then repackage it in a new format and in another language natural to the user, how will this affect SEO?

Most likely, its launch will make a far bigger splash in the SEO world than BERT did when it was made public in 2019. BERT had no strong impact on site performance, but with MUM, keyword queries may no longer be taken into account in the same way. At least for now, there is no data on this. Queries will be entered in natural language, and results can be translated when necessary. Users will thus receive the most relevant information from an AI that is extremely powerful yet not resource-intensive.

What remains for SEO specialists? Most likely, to wait for news and, of course, to publish well-written texts. If they are easy to read and naturally optimized, MUM will reward you for it. No keyword stuffing to boost rankings, and full alignment with what users want: this is what the system will scrutinize even more closely. In the meantime, while waiting for the breakthrough, keep improving your product and focus on building brand preference; this will pay off in future promotion.